OpenAI was founded as a non-profit artificial intelligence research company. Its stated goal is to advance digital intelligence in the way that is most likely to benefit humanity as a whole, unconstrained by a need to generate financial return. The company has said that freedom from financial obligations lets it focus on positive human impact.

What are people using OpenAI for?

Small businesses and large companies can use the OpenAI API to automate repetitive tasks and improve their customer service over time. Because the API can process large volumes of data and return results in real time, it can give businesses a competitive edge.

Does OpenAI collect user data?

OpenAI's website states: “We may automatically collect information about your use of the Services, such as the types of content that you view or engage with, the features you use and the actions you take, as well as your time zone, country, the dates and times of access, user agent and version, type of computer or mobile device, and your computer connection.”

Regarding data submitted through the API, OpenAI also states: “Yes. While OpenAI will not use data submitted by customers via our API to train or improve our models, you can explicitly decide to share your data with us for this purpose.”

What did OpenAI update in its usage policy?

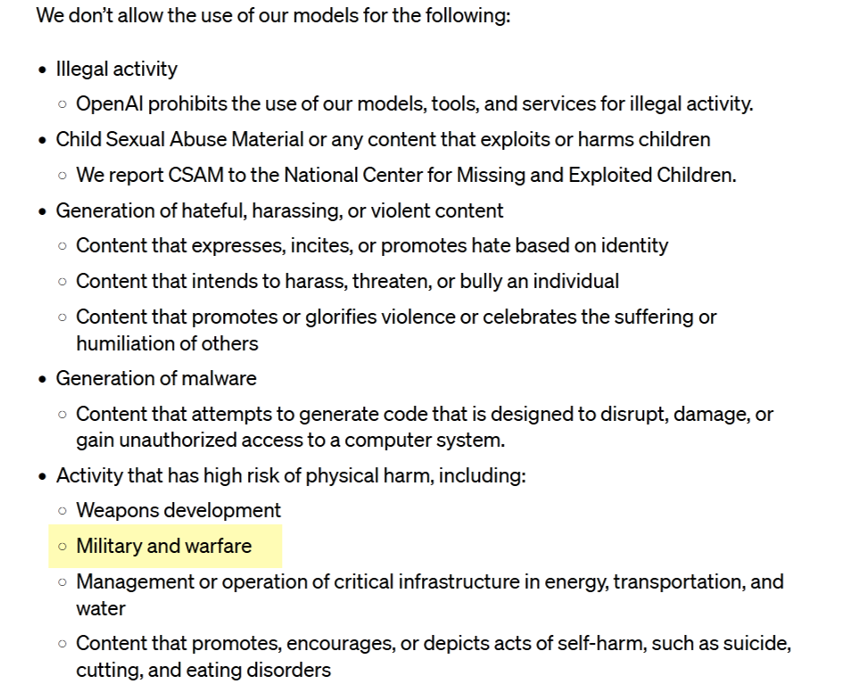

OpenAI has quietly updated its usage policy, removing explicit bans on military applications such as ‘weapons development’ and ‘military and warfare.’ While OpenAI spokesperson Niko Felix highlighted the universal principle of avoiding harm, concerns have been raised about the ambiguity of the new policy regarding military use.

Before the change on January 10, the usage policy specifically disallowed the use of OpenAI models for weapons development, military and warfare, and content that promotes, encourages, or depicts acts of self-harm.

Up until at least Wednesday, OpenAI’s policies page specified that the company did not allow the usage of its models for “activity that has high risk of physical harm” such as weapons development or military and warfare.

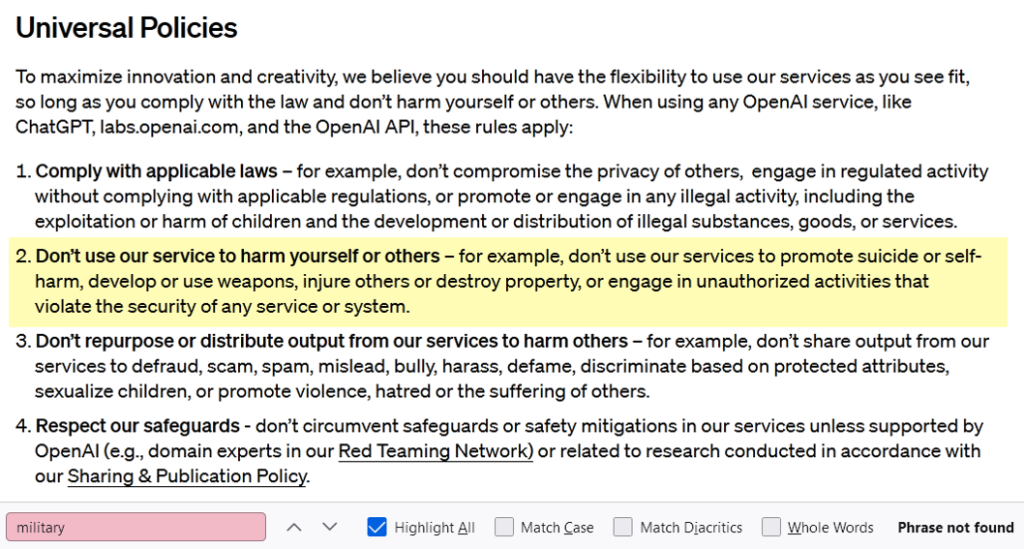

However, OpenAI has revised its policy and removed the specific reference to the military, although its policy still states that users should not “use our service to harm yourself or others,” including to “develop or use weapons.”

In an interview, an OpenAI spokesperson said, “Our policy does not allow our tools to be used to harm people, develop weapons, for communications surveillance, or to injure others or destroy property. There are, however, national security use cases that align with our mission.

“For example, we are already working with DARPA to spur the creation of new cybersecurity tools to secure open-source software that critical infrastructure and industry depend on. It was not clear whether these beneficial use cases would have been allowed under ‘military’ in our previous policies. So the goal with our policy update is to provide clarity and the ability to have these discussions.”

OpenAI’s collaboration with Microsoft, which has invested $13 billion in the large language model (LLM) maker, adds complexity to the discussion, particularly as militaries worldwide seek to integrate machine learning techniques into their operations.

Many observers see this policy update as a weakening of OpenAI’s stance on working with the military, and some predict that the stance will erode further over time.

The policy change comes at a time when many military organizations, including the Pentagon, have shown interest in large language models such as ChatGPT.

The prospect of handing defense and military responsibilities to an AI tool has alarmed many people. Some compare it to the Terminator films and warn that using AI in such a sensitive and powerful domain could end in tragedy.

“OpenAI is well aware of the risk and harms that may arise due to the use of their technology and services in military applications,” said Heidy Khlaaf, engineering director at the cybersecurity firm Trail of Bits and an expert on machine learning and autonomous systems safety.

Khlaaf added that the new policy seems to emphasize legality over safety. “There is a distinct difference between the two policies, as the former clearly outlines that weapons development and military and warfare is disallowed, while the latter emphasizes flexibility and compliance with the law,” she said.

“Developing weapons, and carrying out activities related to military and warfare is lawful to various extents. The potential implications for AI safety are significant. Given the well-known instances of bias and hallucination present within Large Language Models (LLMs), and their overall lack of accuracy, their use within military warfare can only lead to imprecise and biased operations that are likely to exacerbate harm and civilian casualties.”

“Given the use of AI systems in the targeting of civilians in Gaza, it’s a notable moment to make the decision to remove the words ‘military and warfare’ from OpenAI’s permissible use policy,” said Sarah Myers West, managing director of the AI Now Institute and a former AI policy analyst at the Federal Trade Commission. “The language that is in the policy remains vague and raises questions about how OpenAI intends to approach enforcement.”
